If you ever feel cynical about human beings, a good antidote is to talk to artificial-intelligence researchers. You might expect them to be triumphalist now that AI systems match or beat humans at recognizing faces, translating languages, playing board and arcade games, and remembering to use the turn signal. To the contrary, they’re always talking about how marvelous the human brain is, how adaptable, how efficient, how infinite in faculty. Machines still lack these qualities. They’re inflexible, they’re opaque and they’re slow learners, requiring extensive training. Even their well-publicized successes are very narrow.

Many AI researchers got into the field because they want to understand, reproduce and ultimately surpass human intelligence. Yet even those with more practical interests think that machine systems should be more like us. A social media company training its image recognizers, for example, will have no trouble finding cat or celebrity pictures. But other categories of data are harder to come by, and machines could solve a wider range of problems if they were quicker-witted. Data are especially limited if they involve the physical world. If a robot has to learn to manipulate blocks on a table, it can’t realistically be shown every single arrangement it might encounter. Like a human, it needs to acquire general skills rather than memorizing by rote.

In getting by with less input, machines also need to be more forthcoming with output. Just the answer isn’t enough; people also want to know the reasoning, especially when algorithms pass judgment on bank loans or jail sentences. You can interrogate human bureaucrats about their biases and conflicts of interest; good luck doing that with today’s AI systems. In 2018 the European Union gave its citizens a limited right to an explanation for any judgment made by automated processing. In the U.S., the Defense Advanced Research Projects Agency funds an “Explainable AI” research program because military commanders would rather not send soldiers into battle without knowing why.

A huge research community tackles these problems. Ideas abound, and people debate whether a more humanlike intelligence will require radical retooling. Yet it’s remarkable how far researchers have gone with fairly incremental improvements. Self-improvement, imagination, common sense: these seemingly quintessential human qualities are being incorporated into machines, at least in a limited way. The key is clever coaching. Guided by human trainers, the machines take the biggest steps themselves.

Deep networks

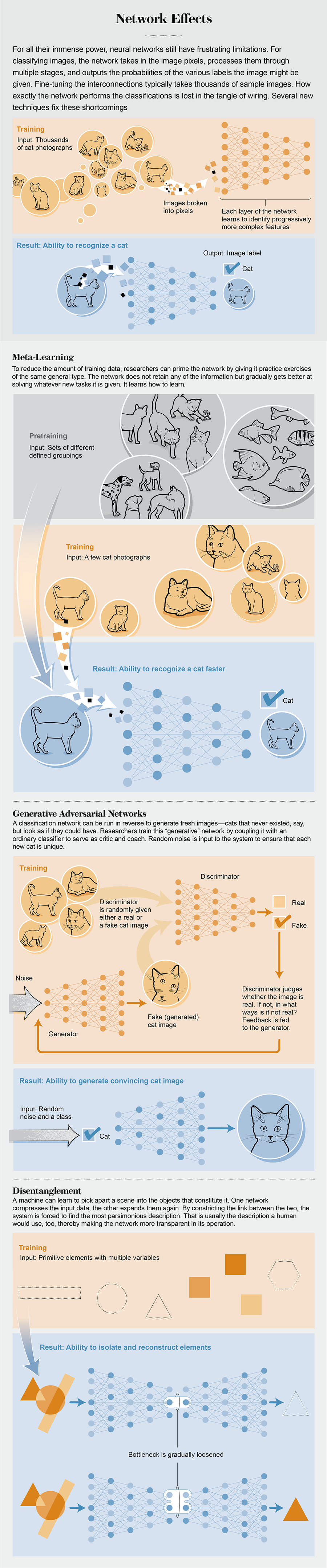

More than most fields of science and engineering, AI is highly cyclical. It goes through waves of infatuation and neglect, and methods come in and out of fashion. Neural networks are the ascendant technology. Such a network is a web of basic computing units: “neurons.” Each can be as simple as a switch that toggles on or off depending on the state of the neurons it is connected to. The neurons typically are arrayed in layers. An initial layer accepts the input (such as image pixels), a final layer produces the output (such as a high-level description of image content), and the intermediate, or “hidden,” layers create arithmetic combinations of the input. Some networks, especially those used for problems that unfold over time, such as language recognition, have loops that reconnect the output or the hidden layers to the input.
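The layered arrangement described above can be sketched in a few lines. This is only an illustration under stated assumptions: the layer sizes, the random connection strengths and the sigmoid "soft switch" are hypothetical choices, not details from any system mentioned here.

```python
import numpy as np

def forward(x, weights, biases):
    """Pass an input vector through each layer in turn."""
    activation = x
    for W, b in zip(weights, biases):
        # Each neuron sums its weighted inputs; a sigmoid then squashes the
        # sum into the range (0, 1), a soft version of an on/off switch.
        activation = 1.0 / (1.0 + np.exp(-(W @ activation + b)))
    return activation

rng = np.random.default_rng(0)
sizes = [4, 3, 2]  # assumed: 4 inputs, one hidden layer of 3 neurons, 2 outputs
weights = [rng.normal(size=(n_out, n_in))
           for n_in, n_out in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n_out) for n_out in sizes[1:]]

# A made-up four-"pixel" input flows from the input layer to the output layer.
output = forward(np.array([0.0, 1.0, 1.0, 0.0]), weights, biases)
```

A deep network would simply have many more entries in `sizes`; the loop stays the same.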

A so-called deep network has tens or hundreds of hidden layers. They might represent midlevel structures such as edges and geometric shapes, although it is not always obvious what they are doing. With thousands of neurons and millions of interconnections, there is no simple logical path through the system. And that is by design. Neural networks are masters at problems not amenable to explicit logical rules, such as pattern recognition.

Crucially, the neuronal connections are not fixed in advance but adapt in a process of trial and error. You feed the network images labeled “dog” or “cat.” For each image, it guesses a label. If it is wrong, you adjust the strength of the connections that contributed to the erroneous result, which is a straightforward exercise in calculus. Starting from complete scratch, without knowing what an image is, let alone an animal, the network does no better than a coin toss. But after perhaps 10,000 examples, it does as well as a human presented with the same images. In other training methods, the network responds to vaguer cues or even discerns the categories entirely on its own.
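To make the trial-and-error loop concrete, here is a minimal sketch with a single artificial neuron. Everything in it is an assumption for illustration: the "images" are just two-dimensional points in two clusters, and the learning rate and step count are arbitrary. The calculus is the gradient of the cross-entropy error with respect to each connection strength.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for labeled images: 2-D points in two clusters,
# label 0 ("cat") around (-1, -1) and label 1 ("dog") around (1, 1).
n = 200
X = np.vstack([rng.normal(-1.0, 0.5, size=(n, 2)),
               rng.normal(1.0, 0.5, size=(n, 2))])
y = np.concatenate([np.zeros(n), np.ones(n)])

w = np.zeros(2)  # connection strengths, starting from scratch
b = 0.0
lr = 0.5         # assumed learning rate

def predict(X, w, b):
    """Return the neuron's estimated probability of label 1."""
    return 1.0 / (1.0 + np.exp(-(X @ w + b)))

# Before training the neuron is right only half the time, like a coin toss.
accuracy_before = np.mean((predict(X, w, b) > 0.5) == y)

for step in range(100):
    error = predict(X, w, b) - y      # how wrong each guess was
    # Adjust each connection in proportion to its share of the error.
    w -= lr * X.T @ error / len(y)
    b -= lr * error.mean()

accuracy_after = np.mean((predict(X, w, b) > 0.5) == y)
```

Real image classifiers apply the same kind of update to millions of connections across many layers rather than to two weights.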

Remarkably, a network can sort images it has never seen before. Theorists are still not entirely sure how it does that, but one factor is that its human trainers tolerate some errors during training or even deliberately introduce them. A network that classifies its initial batch of cats and dogs perfectly might be fudging: basing its judgment on unreliable cues and variations rather than on essential features.

This ability of networks to sculpt themselves means they can solve problems that their human designers have no idea how to solve. And that includes the problem of making the networks even better at what they do.

Credit: Brown Bird Design

Going meta

Teachers often complain that students forget everything over the summer. In lieu of making vacations shorter, they have taken to loading them up with summer homework. But psychologists such as Robert Bjork of the University of California, Los Angeles, have found that forgetting is not inimical to learning but essential to it. That principle applies to machine learning, too.

If a machine learns a task, then forgets it, then learns another task and forgets it, and so on, it can be coached to grasp the common features of those tasks, and it will pick up new variants faster. It won’t have learned anything specific, but it will have learned how to learn—what researchers call meta-learning. When you do want it to retain information, it’ll be ready. “After you’ve learned to do 1,000 tasks, the 1,001st is much easier,” says Sanjeev Arora, a machine-learning theorist at Princeton University. Forgetting is what puts the meta into meta-learning. Without it, the tasks all blur together, and the machine can’t see their overall structure.

Meta-learning gives machines some of our mental agility. “It will probably be key to achieving AI that can perform with human-level intelligence,” says Jane Wang, a computational neuroscientist at Google’s DeepMind in London. Conversely, she thinks that computer meta-learning will help scientists figure out what happens inside our own heads.

In nature, the ultimate meta-learning algorithm is Darwinian evolution. In a protean environment, species are driven to develop the ability to learn rather than rely solely on fixed instincts. In the 1980s AI researchers used simulated evolution to optimize software agents for learning. But evolution is a random search that goes down any number of dead ends, and in the early 2000s researchers found ways to be more systematic and therefore faster. In fact, with the right training regimen, any neural network can learn to learn. As with much else in machine learning, the trick is to be very specific about what you want. If you want a network to learn faces, you should present it with a series of faces. By analogy, if you want a network to learn how to learn, you should present it with a series of learning exercises.

In 2017 Chelsea Finn of the University of California, Berkeley, and her colleagues developed a method they called model-agnostic meta-learning. Suppose you want to teach your neural network to classify images into one of five categories, be it dog breeds, cat breeds, car makes, hat colors, or what have you. In normal learning, without the “meta,” you feed in thousands of dog images and tweak the network to sort them. Then you feed in thousands of cats. That has the unfortunate side effect of overriding the dogs; taught this way, the machine can perform only one classification task at a time.

In model-agnostic meta-learning, you interleave the categories. You show the network just five dog images, one of each breed. Then you give it a test image and see how well it classifies that dog—probably not very well after five examples. You reset the network to its starting point, wiping out whatever modest knowledge of dogs it may have gained. But—this is the key step—you tweak this starting point to do better next time. You switch to cats—again, just one sample of each breed. You continue for cars, hats, and so on, randomly cycling among them. Rotate tasks and quiz often.

The network does not master dogs, cats, cars or hats but gradually learns the initial state that gives it the best head start on classifying anything that comes in fives. By the end, it is a quick study. You might show it five bird species: it gets them right away.
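The rotate, quiz, reset and nudge cycle can be sketched on a toy problem. Note the substitutions: this is not the researchers' code, and it uses a simpler first-order update (in the style of the Reptile algorithm) rather than the full model-agnostic meta-learning gradient. The task family (fitting straight lines with similar slopes and offsets), the step sizes and the function names are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def sample_task():
    """Assumed task family: lines y = a*x + b with similar a and b."""
    return rng.normal(3.0, 0.3), rng.normal(-1.0, 0.3)

def sample_points(a, b, k=5):
    """Draw k examples from one task (five, as in the text)."""
    x = rng.uniform(-1.0, 1.0, size=k)
    return x, a * x + b

def adapt(theta, x, y, steps=3, lr=0.1):
    """Take a few gradient steps on squared error, starting from theta."""
    w, c = theta
    for _ in range(steps):
        err = w * x + c - y
        w, c = w - lr * 2 * np.mean(err * x), c - lr * 2 * np.mean(err)
    return np.array([w, c])

def loss(theta, x, y):
    return np.mean((theta[0] * x + theta[1] - y) ** 2)

theta = np.zeros(2)  # the shared starting point being meta-learned
for _ in range(2000):
    a, b = sample_task()                      # rotate to a new task
    x, y = sample_points(a, b)
    adapted = adapt(theta, x, y)              # brief task-specific learning
    # Forget the adapted weights, but nudge the starting point toward them.
    theta = theta + 0.05 * (adapted - theta)

# Quiz on a task never seen before, with only five training examples.
a, b = sample_task()
x_train, y_train = sample_points(a, b)
x_test, y_test = sample_points(a, b, k=100)
loss_meta = loss(adapt(theta, x_train, y_train), x_test, y_test)
loss_scratch = loss(adapt(np.zeros(2), x_train, y_train), x_test, y_test)
```

In this toy setting the learned starting point ends up near the typical task, so a few steps from it fit a new line far better than the same few steps from scratch.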

Finn says the network achieves this acuity by developing a bias, which, in this context, is a good thing. It expects its input data to take the form of an image and prepares accordingly. “If you have a representation that’s able to pick out the shapes of objects, the colors of objects and the textures and is able to represent that in a very concise way, then when you see a new object, you should be able to very quickly recognize that,” she says.

Finn and her colleagues also applied their technique to robots, both real and virtual. In one experiment, they gave a four-legged robot a series of tasks to run in various directions. Going through meta-learning, the robot surmised that the common feature of those tasks was to run, and the only question was: Which way? So the machine prepared by running in place. “If you’re running in place, it’s going to be easier to very quickly be adapted to running forward or running backward because you’re already running,” Finn says.

This technique, like related approaches by Wang and others, does have its limitations. Although it reduces the amount of sample data needed for a given task, it still requires a lot of data overall. “Current meta-learning methods require a very large amount of background training,” says Brenden Lake, a cognitive scientist at New York University, who has become a leading advocate for more humanlike AI. Meta-learning is also computationally demanding because it leverages what can be very subtle differences among tasks. If the problems are not sufficiently well defined mathematically, researchers must go back to slower evolutionary algorithms. “Neural networks have made progress but are still far from achieving humanlike concept learning,” Lake says.

Things that never were

Just what the Internet needed: more celebrity pictures. Over the past couple of years a new and strange variety of them has flooded the ether: images of people who never actually existed. They are the product of a new AI technology with an astute form of imagination. “It’s trying to imagine photos of new people who look like they could plausibly be a celebrity in our society,” says Ian J. Goodfellow of Google Brain in Mountain View, Calif. “You get these very realistic photos of conventionally attractive people.”

Imagination is fairly easy to automate. You can basically take an image-recognition, or “discriminative,” neural network and run it backward, whereupon it becomes an image-production, or “generative,” network. A discriminator, given data, returns a label such as a dog’s breed. A generator, given a label, returns data. The hard part is to ensure the data are meaningful. If you enter “Shih Tzu,” the network should return an archetypal Shih Tzu. It needs to develop a built-in concept of dogs if it is to produce one on demand. Tuning a network to do so is computationally challenging.

In 2014 Goodfellow, then finishing his Ph.D., hit on the idea of partnering the two types of network. A generator creates an image, a discriminator compares it with data and the discriminator’s nitpicking coaches the generator. “We set up a game between two players,” Goodfellow says. “One of them is a generator network that creates images, and the other one is a discriminator network that looks at images and tries to guess whether they’re real or fake.” The technique is known as generative adversarial networks.

Initially the generator produces random noise, clearly not an image of anything, much less the training data. But the discriminator isn’t very discriminating at the outset. As it refines its taste, the generator has to up its game. So the two egg each other on. In a victory of artist over critic, the generator eventually reproduces the data with enough verisimilitude that the discriminator is reduced to guessing at random whether its output is real or not.
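The game between the two networks is usually written as a single objective that one player tries to raise and the other to lower. The notation below is the standard textbook form, not something spelled out in this article: D(x) is the discriminator's estimated probability that x is real, G(z) is the image the generator makes from random noise z, and p_data and p_z are the distributions of real images and of noise.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\mathrm{data}}}\bigl[\log D(x)\bigr]
  + \mathbb{E}_{z \sim p_{z}}\bigl[\log\bigl(1 - D(G(z))\bigr)\bigr]
```

When the generator matches the data perfectly, the best the discriminator can do is output D(x) = 1/2 for every image, which is the random guessing described above.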

The procedure is fiddly, and the networks can get stuck creating unrealistic images or failing to capture the full diversity of the data. The generator, doing the minimum necessary to fool the discriminator, might always place faces against the same pink background, for example. “We don’t have a great mathematical theory of why some models nonetheless perform well, and others perform poorly,” Goodfellow says.

Be that as it may, few other techniques in AI have found so many uses so quickly, from analyzing cosmological data to designing dental crowns. Anytime you need to imbibe a data set and produce simulated data with the same statistics, you can call on a generative adversarial network. “You just give it a big bunch of pictures, and you say, ‘Can you make me some more pictures like them?’” says Kyle Cranmer, an N.Y.U. physicist, who has used the technique to simulate particle collisions more quickly than solving all the quantum equations.

One of the most remarkable applications is Pix2Pix, which does almost any kind of image processing you can dream of. For instance, a graphics app such as Photoshop can readily reduce a color image to gray scale or even to a line drawing. Going the other way takes a lot more work—colorizing an image or drawing requires making creative choices. But Pix2Pix can do that. You give it some sample pairs of color images and line drawings, and it learns to relate the two. At that point, you can give it a line drawing, and it will fill in an image, even for things that you didn’t originally train it on.

Other projects replace competition with cooperation. In 2017 Nicholas Guttenberg and Olaf Witkowski, both at the Earth-Life Science Institute in Tokyo, set up a pair of networks and showed them some mini paintings they had created in various artistic styles. The networks had to ascertain the style, with the twist that each saw a different portion of the artwork. So they had to work together, and to do that, they had to develop a private language—a simple one, to be sure, but expressive enough for the task at hand. “They would find a common set of things to discuss,” Guttenberg says.

Networks that teach themselves to communicate open up new possibilities. “The hope is to see a society of networks develop language and teach skills to one another,” Guttenberg says. And if a network can communicate what it does to another of its own kind, maybe it can learn to explain itself to a human, making its reasoning less inscrutable.

Learning common sense

The most fun part of an AI conference is when a researcher shows the silly errors that neural networks make, such as mistaking random static for an armadillo or a school bus for an ostrich. Their knowledge is clearly very shallow. The patterns they discern may have nothing to do with the physical objects that compose a scene. “They lack grounded compositional object understanding that even animals like rats possess,” says Irina Higgins, an AI researcher at DeepMind.

In 2009 Yoshua Bengio of the University of Montreal suggested that neural networks would achieve some genuine understanding if their internal representations could be disentangled—that is, if each of their variables corresponded to some independent feature of the world. For instance, the network should have a position variable for each object. If an object moves, but everything else stays the same, just that one variable should change, even if hundreds or thousands of pixels are altered in its wake.

In 2016 Higgins and her colleagues devised a method to do that. It works on the principle that the real set of variables—the set that aligns with the actual structure of the world—is also the most economical. The millions of pixels of an image are generated by a relatively few variables combined in multitudinous ways. “The world has redundancy—this is the sort of redundancy that the brain can compress and exploit,” Higgins says. To reach a parsimonious description, her technique does the computational equivalent of squinting—deliberately constricting the network’s capacity to represent the world, so it is forced to select only the most important factors. She gradually loosens the constriction and allows it to include lesser factors.
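The article does not spell out the mathematics, so what follows is only one common way to formalize such a constriction, offered as an assumption rather than as the team's exact recipe. In a variational autoencoder, an encoder q compresses an image x into a few variables z and a decoder p reconstructs the image from them. A weight β on the compression penalty sets how tightly the bottleneck squeezes; all the symbols here are standard notation, not taken from the text.

```latex
\mathcal{L}(\theta, \phi; x) =
  \mathbb{E}_{q_{\phi}(z \mid x)}\bigl[\log p_{\theta}(x \mid z)\bigr]
  - \beta \, D_{\mathrm{KL}}\bigl(q_{\phi}(z \mid x) \,\|\, p(z)\bigr)
```

Under this reading, a large β forces the network to spend its limited capacity on the few factors that matter most for reconstruction, and easing the penalty lets lesser factors in.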

In one demonstration, Higgins and her colleagues constructed a simple “world” for the network to dissect. It consisted of heart, square and oval shapes on a grid. Each could be one of six different sizes and oriented at one of 20 different angles. The researchers presented all these permutations to the network, whose goal was to isolate the five underlying factors: shape, position along the two axes, orientation and size. At first, they allowed the network just a single factor. It chose position as most important, the one variable without which none of the others would make much sense. In succession, the network added the other factors.

To be sure, in this demonstration the researchers knew the rules of this world because they had made it themselves. In real life, it may not be so obvious whether disentanglement is working or not. For now that assessment still takes a human’s subjective judgment.

Like meta-learning and generative adversarial networks, disentanglement has lots of applications. For starters, it makes neural networks more understandable. You can directly see their reasoning, and it is very similar to human reasoning. A robot can also use disentanglement to map its environment and plan its moves rather than bumbling around by trial and error. Combined with what researchers call intrinsic motivation—in essence, curiosity—disentanglement guides a robot to explore systematically.

Furthermore, disentanglement helps networks to learn new data sets without losing what they already know. For instance, suppose you show the network dogs. It will develop a disentangled representation specific to the canine species. If you switch to cats, the new images will fall outside the range of that representation—the type of whiskers will be a giveaway—and the network will notice the change. “We can actually look at how the neurons are responding, and if they start to act atypically, then we should probably start learning about a new data set,” Higgins says. At that point, the network might adapt by, for example, adding extra neurons to store the new information, so it won’t overwrite the old.

Many of the qualities that AI researchers are giving their machines are associated, in humans, with consciousness. No one is sure what consciousness is or why we have a vivid mental life, but it has something to do with our ability to construct models of the world and of ourselves. AI systems need that ability, too. A conscious machine seems far off, but could today’s technologies be the baby steps toward one?